Kafka Producer

The flir-north-kafka plugin sends data from FLIR Bridge to Apache Kafka. FLIR Bridge acts as a Kafka producer, sending reading data to Kafka. This implementation is a simplified producer that sends all data on a single Kafka topic. Each message contains an asset name, a timestamp, and a set of reading values as a JSON document.
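
To make the message shape concrete, here is a minimal sketch of how one reading might be serialized before it is sent. The field names `asset`, `timestamp`, and `readings`, the helper `build_message`, and the sample values are assumptions for illustration; the exact keys the plugin emits are not specified here.

```python
import json
from datetime import datetime, timezone


def build_message(asset, timestamp, readings):
    """Serialize one reading as a JSON document (hypothetical field names)."""
    payload = {
        "asset": asset,                      # asset name
        "timestamp": timestamp.isoformat(),  # ISO 8601 timestamp
        "readings": readings,                # data point name -> value
    }
    return json.dumps(payload).encode("utf-8")


message = build_message(
    "camera-01",
    datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc),
    {"temperature": 21.5},
)
print(message.decode("utf-8"))
```

Every message built this way would be published to the same topic, matching the single-topic design described above.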

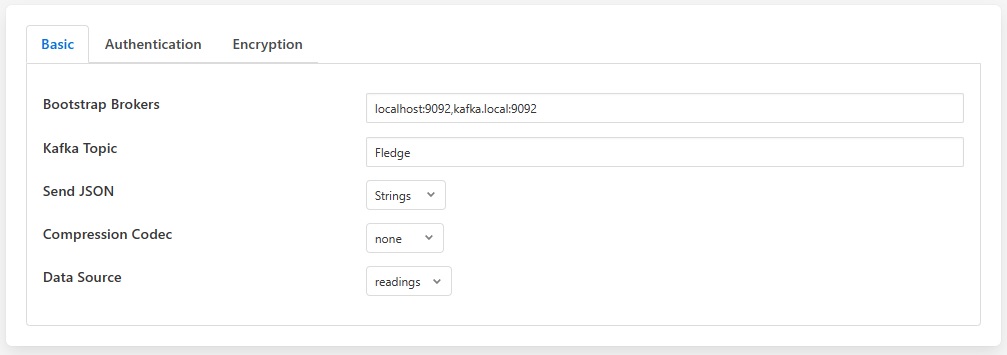

The basic configuration of the Kafka plugin is simple, consisting of the following parameters.

Bootstrap Brokers: A comma-separated list of Kafka brokers to use to establish a connection to the Kafka system.

Kafka Topic: The Kafka topic to which all data is sent.

Send JSON: This controls how JSON data points should be sent to Kafka. These may be sent as strings or as JSON objects.

Compression Codec: The compression codec to be used to send data to the Kafka broker. Supported compression codecs are gzip, snappy, lz4, and none. The default value is none, in which case no compression takes place. If the plugin fails to set the requested codec, it continues to send data using the previously configured codec, or with no compression if none was configured.

Data Source: Which FLIR Bridge data to send to Kafka: Readings or FLIR Bridge Statistics.
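
Two of the parameters above are easier to follow with an example. The sketch below is an illustration only, not the plugin's implementation: `choose_codec` mimics the fallback described for Compression Codec, and `encode_readings` shows one plausible reading of Send JSON, where a nested JSON data point is either embedded as an object or sent as an escaped string. Both function names and the sample data are hypothetical.

```python
import json

SUPPORTED_CODECS = ("none", "gzip", "snappy", "lz4")


def choose_codec(requested, previous="none"):
    """Use the requested codec if it is supported, else keep the previous one."""
    return requested if requested in SUPPORTED_CODECS else previous


def encode_readings(readings, json_as_object):
    """Serialize readings; nested JSON values stay objects or become strings."""
    if json_as_object:
        return json.dumps(readings)
    # Assumption: "as strings" means nested values are serialized separately.
    flattened = {
        name: json.dumps(value) if isinstance(value, (dict, list)) else value
        for name, value in readings.items()
    }
    return json.dumps(flattened)


readings = {"temperature": 21.5, "position": {"x": 1, "y": 2}}
as_object = encode_readings(readings, json_as_object=True)
as_string = encode_readings(readings, json_as_object=False)
print(as_object)
print(as_string)
```

A consumer of the string form has to parse the `position` value a second time, which is the practical difference between the two settings under this interpretation.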

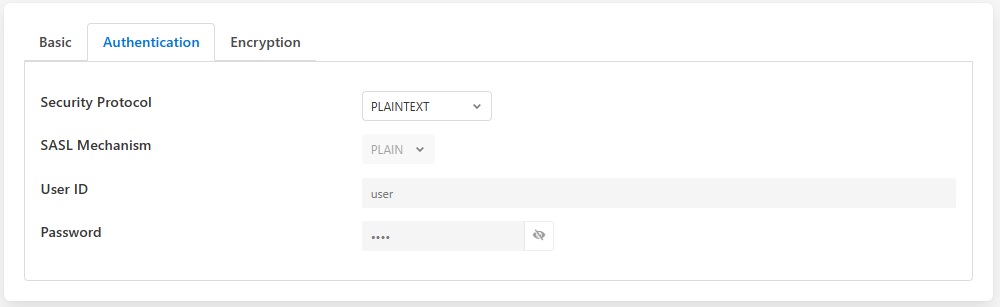

Security Protocol: The security protocol to be used to connect to the Kafka broker.

SASL Mechanism: The authentication method to be used. The supported mechanisms are PLAIN, SCRAM-SHA-256 and SCRAM-SHA-512.

User ID: The User ID to use when the Security Protocol is set to SASL_PLAINTEXT or SASL_SSL.

Password: The Password to use when the Security Protocol is set to SASL_PLAINTEXT or SASL_SSL.
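
As a rough guide to how these settings relate to one another, the sketch below maps them onto librdkafka-style client properties (`security.protocol`, `sasl.mechanisms`, `sasl.username`, `sasl.password`). That the plugin passes its settings through in exactly this way is an assumption, and the helper `sasl_properties` is hypothetical.

```python
SASL_PROTOCOLS = ("SASL_PLAINTEXT", "SASL_SSL")
SASL_MECHANISMS = ("PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512")


def sasl_properties(security_protocol, mechanism=None, user_id=None, password=None):
    """Build client properties; credentials apply only to SASL protocols."""
    props = {"security.protocol": security_protocol}
    if security_protocol in SASL_PROTOCOLS:
        if mechanism not in SASL_MECHANISMS:
            raise ValueError(f"unsupported SASL mechanism: {mechanism!r}")
        props["sasl.mechanisms"] = mechanism
        props["sasl.username"] = user_id
        props["sasl.password"] = password
    return props


# Hypothetical credentials, for illustration only.
props = sasl_properties("SASL_SSL", "SCRAM-SHA-256", "bridge-user", "secret")
print(props)
```

With a non-SASL protocol such as `PLAINTEXT`, the User ID and Password are simply not used.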

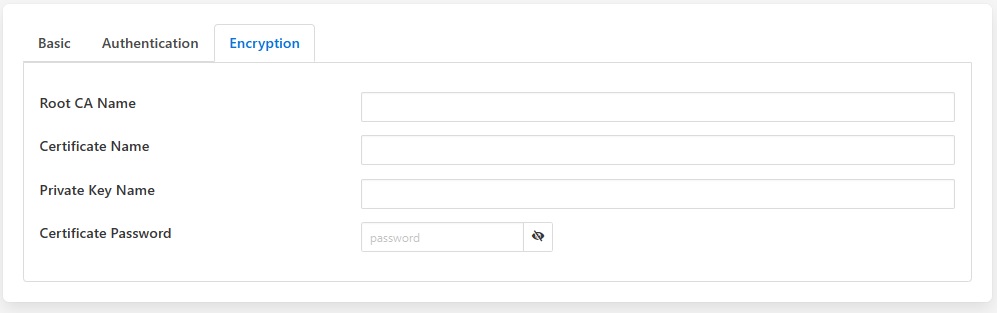

Root CA Name: Name of the root certificate authority that will be used to verify the certificate.

Certificate Name: Name of the client certificate used for identity authentication.

Private Key Name: Name of the client private key required for communication.

Certificate Password: Optional. The password to be used when loading the certificate chain.

All the certificates must be added to the certificate store within FLIR Bridge.
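
The certificate settings are given as names rather than paths, so the plugin has to resolve them against the certificate store. The sketch below shows one way that resolution could look, using librdkafka-style property names (`ssl.ca.location`, `ssl.certificate.location`, `ssl.key.location`, `ssl.key.password`). The store directory, the file extensions, and the helper `ssl_properties` are all assumptions for illustration.

```python
from pathlib import PurePosixPath


def ssl_properties(store_dir, root_ca, certificate, private_key, password=""):
    """Resolve certificate names to files in a (hypothetical) store directory."""
    store = PurePosixPath(store_dir)
    props = {
        # File extensions below are assumed, not taken from the plugin.
        "ssl.ca.location": str(store / f"{root_ca}.pem"),
        "ssl.certificate.location": str(store / f"{certificate}.pem"),
        "ssl.key.location": str(store / f"{private_key}.key"),
    }
    if password:  # the password is optional
        props["ssl.key.password"] = password
    return props


ssl_props = ssl_properties("/path/to/certs", "ca", "client", "client")
print(ssl_props)
```

Leaving the password empty omits the key password property entirely, mirroring the optional Certificate Password setting.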

Sending To Azure Event Hub

The Kafka plugin can be used to send data to an Azure Event Hub that is configured with a Shared Access Signature (SAS). This requires the following configuration settings:

Bootstrap Brokers: Azure event hub endpoint

Topic: Azure event hub name

Security Protocol: SASL_SSL

SASL Mechanism: PLAIN

User ID: $ConnectionString

SSL Certificate Password: Must be left blank
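
The settings above can be collected into a single structure, as in the following sketch. The helper `event_hub_settings` and the placeholder values are hypothetical. The endpoint format `<namespace>.servicebus.windows.net:9093` and the use of the Event Hubs connection string as the Password follow Azure's documented Kafka endpoint convention; they are not stated in the list above, so treat them as assumptions and check them against your Azure configuration.

```python
def event_hub_settings(namespace, event_hub, connection_string):
    """Collect the plugin settings for an Azure Event Hub secured with SAS."""
    return {
        "Bootstrap Brokers": f"{namespace}.servicebus.windows.net:9093",
        "Topic": event_hub,
        "Security Protocol": "SASL_SSL",
        "SASL Mechanism": "PLAIN",
        "User ID": "$ConnectionString",  # literal string, not a variable
        "Password": connection_string,   # assumption: the SAS connection string
        "SSL Certificate Password": "",  # left blank
    }


# Placeholder values; substitute your own namespace, hub, and connection string.
settings = event_hub_settings("my-namespace", "my-hub", "<connection string>")
for name, value in settings.items():
    print(f"{name}: {value}")
```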